Interview Smart Contract Engineers: Evaluate Real Solidity Skill

Most Web3 founders rethink their hiring approach only after they’ve made a painful mis-hire.

And in smart contract engineering, that mistake doesn’t just slow down delivery — it changes how the entire team functions.

A wrong hire shows up quickly: QA cycles stretch, auditors lose confidence, senior engineers become firefighters, and release windows tighten.

The surprising part? Most of these issues are visible during the interview process, but only if the interview is designed to reveal them.

The real problem is simple:

Most founders end up guessing a candidate’s true ability because their interviews measure memorization, not engineering judgment.

This guide lays out a practical, founder-friendly approach to interviewing smart contract engineers in a way that removes guesswork.

Whether you’re a non-technical founder trying to hire Solidity developers for DeFi or you’re working with a web3 recruitment agency for startups, this is a simple, repeatable smart contract engineer interview process for evaluating real skill without relying on trivia.

It reflects patterns used by strong L1, L2, and DeFi teams — designed for real hiring constraints: limited senior time, remote collaboration, and high-stakes production risk.

TL;DR

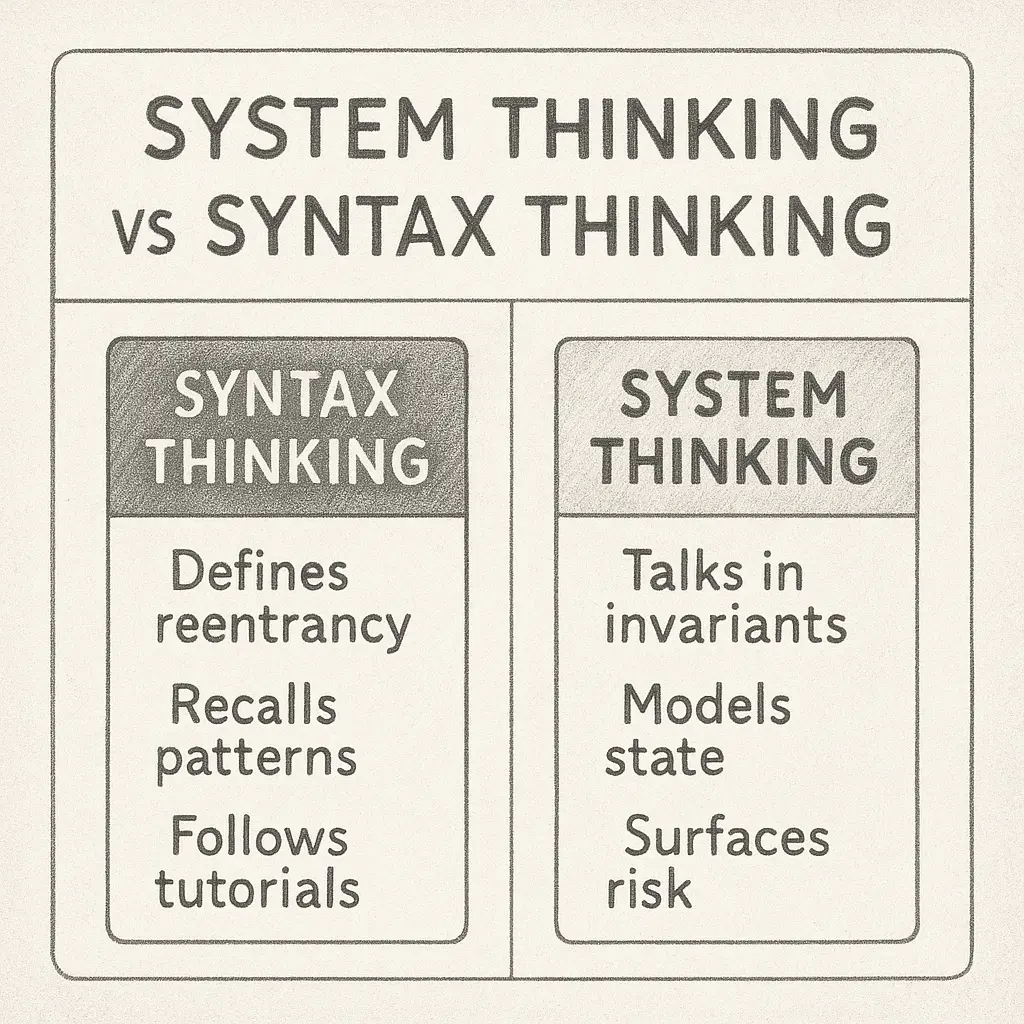

Most interviews test syntax instead of engineering judgment — that’s why mis-hires happen.

Instead of guessing ability, focus on reasoning, debugging, testing mindset, and ownership.

A more human, conversation-driven approach reveals real production readiness.

Why Traditional Interviews Fail for Smart Contract Roles

Most general engineering interviews rely on algorithm puzzles, system design, or general coding fluency.

Smart contract engineering requires all of that plus:

reasoning about invariants

recognizing security surfaces

modeling financial incentives

debugging failures that look non-deterministic because they depend on transaction ordering or chain state

designing preventive tests

understanding upgrade safety and storage layout

Because the environment is adversarial and often irreversible, you must evaluate whether a candidate can think safely under uncertainty — not whether they can recall definitions or syntax.

The AOB community’s Smart Contract Interview Prep Hub reinforces this: real production engineers are differentiated by how they reason, not what they memorize.

Smart Contract Engineer Interview Process (4 Steps)

Below are the four conversation styles that separate real engineers from those who only appear strong on paper.

These are not rigid “stages” — they’re ways to observe thinking clearly and consistently.

1. Start With a Simple Reasoning Discussion (30 minutes)

Goal: Understand how they think, not how much they’ve memorized.

Instead of asking “Explain reentrancy,” try:

“Walk me through what happens in this function — from storage → execution → post-state.”

This prompt reveals whether they naturally talk about:

invariants

assumptions

risk boundaries

state transitions

external call concerns

If they describe the code line-by-line but never mention the system, that’s a strong indicator they’re not yet production-ready.

This is the same gap highlighted in AOB’s “Gas pitfalls juniors mention vs what interviewers actually assess”, which is useful for understanding why sounding smart often does not equal production readiness.

Add a light ambiguity test

Ask:

“This contract works, but something feels unsafe. What concerns you?”

Strong candidates immediately call out missing invariants, unsafe ordering, or unclear permissions.

Weak candidates ask, “Where is the bug?” — revealing they cannot explore risk without hints.
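One way to make this prompt concrete is to hand the candidate a function with unsafe ordering and see whether they notice it unprompted. The sketch below is an illustration in Python rather than Solidity. Everything in it (the `Vault` class, `withdraw_unsafe`, `withdraw_safe`, the `solvent` invariant, and the re-entry limit of five) is a hypothetical stand-in, not code from a real contract.

```python
class Vault:
    """Toy model of a vault; not a real contract."""

    def __init__(self):
        self.balances = {}  # per-user accounting (the "storage")
        self.funds = 0      # assets the vault actually holds

    def deposit(self, user, amount):
        self.balances[user] = self.balances.get(user, 0) + amount
        self.funds += amount

    def withdraw_unsafe(self, user, on_receive):
        amount = self.balances.get(user, 0)
        if amount == 0 or self.funds < amount:
            return
        self.funds -= amount      # assets leave the vault
        on_receive(amount)        # external call: runs arbitrary code
        self.balances[user] = 0   # accounting is updated too late

    def withdraw_safe(self, user, on_receive):
        amount = self.balances.get(user, 0)
        if amount == 0 or self.funds < amount:
            return
        self.balances[user] = 0   # update accounting first
        self.funds -= amount
        on_receive(amount)        # external call comes last

    def solvent(self):
        # Invariant: the vault holds exactly what it owes.
        return self.funds == sum(self.balances.values())


def run_attack(withdraw_name):
    """Re-enter the chosen withdraw function from the receive callback."""
    vault = Vault()
    vault.deposit("alice", 100)
    vault.deposit("mallory", 10)
    received = []

    def reenter(amount):
        received.append(amount)
        if len(received) < 5:  # bounded re-entry, an arbitrary limit
            getattr(vault, withdraw_name)("mallory", reenter)

    getattr(vault, withdraw_name)("mallory", reenter)
    return sum(received), vault.funds, vault.solvent()


print(run_attack("withdraw_unsafe"))  # (50, 60, False)
print(run_attack("withdraw_safe"))    # (10, 100, True)
```

Both functions "work" for an honest caller, which is what makes this a reasonable ambiguity test. A strong candidate tends to state the solvency invariant and question the order of the external call before anyone mentions reentrancy by name.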

2. Give a Small Debugging Exercise (45–60 minutes)

Goal: See how they behave during incidents.

Smart contract debugging shows you how someone thinks when money is at risk. Give them a realistic, broken contract and let them:

reproduce the issue

model the state transition

identify root cause

explain the failure mode

propose a fix + trade-offs

Strong engineers validate invariants, test assumptions, consider storage layout, and think aloud.

Weak ones jump to patching or rely on logs as truth, a problem raised often in AOB’s debugging discussions.

Related Discussion:

Debugging Smart Contracts Is Tough — How Do You Make It Easier?

If you want further depth, you can cross-reference their approach with workflows from AOB’s Solidity Debugging Tooling Hub, which mirrors how senior engineers actually debug in production.
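As an example of a small, realistic exercise, the sketch below models a fee calculation that divides before it multiplies. It is written in Python, where `//` truncates non-negative integers the same way Solidity's unsigned integer division does. The function names, the basis-point fee, and the sample amounts are all assumptions chosen for illustration.

```python
BPS = 10_000  # basis-point denominator (assumed fee unit)


def fee_buggy(amount: int, fee_bps: int) -> int:
    # Divides first: truncation throws away everything below 10_000,
    # so small amounts pay no fee at all.
    return amount // BPS * fee_bps


def fee_fixed(amount: int, fee_bps: int) -> int:
    # Multiplies first, so truncation happens once, at the end.
    return amount * fee_bps // BPS


def reproduce(amounts, fee_bps):
    """Return (amount, buggy_fee, fixed_fee) wherever the formulas disagree."""
    return [
        (a, fee_buggy(a, fee_bps), fee_fixed(a, fee_bps))
        for a in amounts
        if fee_buggy(a, fee_bps) != fee_fixed(a, fee_bps)
    ]


print(reproduce([9_999, 10_000, 25_000], 30))
# [(9999, 0, 29), (25000, 60, 75)]
```

The exercise covers each step in the list above: the candidate reproduces the mismatch, explains why 10_000 passes while 9_999 does not, names integer truncation as the root cause, and proposes the reordered formula. The trade-off worth hearing about is that multiplying first produces a larger intermediate value, which Python's unbounded integers hide but which can overflow a fixed-width integer (in Solidity 0.8 and later, that overflow reverts the transaction).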

3. Watch How They Think About Testing (45 minutes)

Goal: Evaluate preventive thinking before coding.

In smart contract engineering, more of the work goes into testing than into writing the contract itself.

Start with:

“Before writing any tests, tell me what you would test.”

Strong candidates will talk about:

invariants

forbidden behaviors

edge-case transitions

stale state scenarios

adversarial inputs

Then ask them to write one focused test.

You’re not measuring syntax — you’re measuring how they protect the system.

Related hub:

Smart Contract QA Testing Hub (great for building test-signal questions).

Weak indicators include:

starting with happy-path tests

copying template patterns

chasing coverage instead of correctness
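To show the difference between a happy-path test and a protective one, here is a minimal sketch in Python, not a real test suite. The `Token` class, the user names, and the random ranges are hypothetical. The first test checks a forbidden behavior (an overdraft must be rejected and must leave state untouched), and the second checks an invariant (total supply is conserved) across a random sequence of transfers.

```python
import random


class Token:
    """Toy token ledger used only to illustrate the tests below."""

    def __init__(self, initial):
        self.balances = dict(initial)
        self.total_supply = sum(initial.values())

    def transfer(self, sender, recipient, amount):
        if amount < 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


def test_overdraft_is_rejected():
    # Forbidden behavior: spending more than you hold must fail
    # and must not change any balance.
    token = Token({"alice": 5})
    try:
        token.transfer("alice", "bob", 6)
    except ValueError:
        pass
    else:
        raise AssertionError("overdraft should have been rejected")
    assert token.balances == {"alice": 5}


def test_supply_is_conserved(seed=0, steps=500):
    # Invariant: no sequence of transfers creates or destroys tokens.
    rng = random.Random(seed)
    users = ["alice", "bob", "carol"]
    token = Token({"alice": 100, "bob": 50, "carol": 0})
    for _ in range(steps):
        sender, recipient = rng.choice(users), rng.choice(users)
        try:
            token.transfer(sender, recipient, rng.randint(0, 120))
        except ValueError:
            pass  # rejected transfers are expected; state must still hold
        assert sum(token.balances.values()) == token.total_supply
        assert all(b >= 0 for b in token.balances.values())


test_overdraft_is_rejected()
test_supply_is_conserved()
```

Neither test says anything about coverage. Each one names a property the system must never violate, which is the signal this part of the interview is looking for.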

4. Test Ownership and Autonomy (30–40 minutes)

Goal: Ensure they can work in async, high-risk Web3 teams.

Most smart contract teams are globally distributed, lightly managed, and constantly working near production boundaries, which is why “ownership signals” matter when you build a web3 engineering team.

Ownership matters as much as technical skill.

Ask how they handle blockers

“When you're blocked in an async environment, what do you do?”

Strong candidates talk about:

writing reproducible states

proposing fallback options

communicating high-context summaries

unblocking through assumptions

avoiding silent waiting

Related Thread:

How Do You Manage Time Zone Differences in Remote Blockchain Jobs?

Ask about incident accountability

“Tell me about a production issue you caused or resolved. What changed afterward?”

You’re looking for:

humility

learning trajectory

root-cause awareness

process improvement thinking

Related:

How do you rebuild clarity in a smart contract team when owner-driven chaos creeps in?

If a candidate deflects blame or focuses only on technical symptoms, they will struggle in distributed teams.

A Simple Way to Remember the Approach

You’re not testing what candidates know.

You’re testing how they think when the system moves, how they react when it breaks, and how they communicate when uncertainty appears.

If your interview surfaces reasoning, debugging maturity, testing habits, and ownership behaviors, you won’t have to guess their real ability.

Smart Contract Engineer Red Flags That Predict Mis-Hires

Pay attention if a candidate:

only describes syntax, not systems

cannot articulate assumptions

answers questions only after hints

never mentions invariants during tests

freezes when facing ambiguity

patches code without modeling global impact

These signals outweigh impressive GitHub repos, audit badges, or big-company backgrounds.

Behavior beats credentials in Web3 hiring.

Leveling guide (what “good” looks like by seniority)

Junior: spots obvious risks, writes one strong negative test, explains trade-offs simply.

Mid-level: models deeper state transitions, names invariants, proposes fixes with pros/cons.

Senior: anticipates failure modes, considers upgrade/storage safety, improves process after incidents.

If you’re trying to hire a blockchain developer fast, this leveling guide does more to prevent mis-leveling than any trivia question.

Why This Method Works

Smart contract engineering operates in a high-stakes environment where the cost of mistakes is real.

The engineer you hire shapes how safely your team can ship — and how confidently your product moves on-chain.

This interview style works because it evaluates:

clarity under incomplete information

safety-first decision-making

reasoning through state changes

debugging under pressure

preventive testing habits

capacity for async ownership

It replaces guesswork with genuine insight.

If you’re a founder hiring smart contract engineers, or a candidate preparing for deeper technical conversations, browse roles and hiring signals from teams that value reasoning over buzzwords:

https://artofblockchain.club/blockchain-developer-jobs

A simple scorecard (so hiring isn’t based on “vibes”)

If you’re scaling a team or using a web3 hiring partner, consistency matters. Use this 4-signal scorecard after every interview:

Reasoning (0–2): Do they naturally name assumptions, invariants, and risk boundaries?

Debugging (0–2): Do they model state transitions and isolate root cause before patching?

Testing mindset (0–2): Do they think in terms of forbidden behaviors and adversarial inputs, not just happy paths?

Ownership (0–2): Do they communicate clearly in async environments and show accountability in incidents?

This keeps your web3 talent acquisition strategy repeatable even when multiple people interview.
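When several people interview the same candidate, the scorecards can be combined mechanically. The sketch below is one possible way to do that in Python. It assumes whole-number scores of 0, 1, or 2 per signal, and it flags a signal for discussion when interviewers differ by the full two points. That flagging rule, the function names, and the sample cards are assumptions for illustration, and no pass or fail threshold is implied.

```python
SIGNALS = ("reasoning", "debugging", "testing", "ownership")


def validate(card):
    """Reject a scorecard with a missing or out-of-range signal."""
    for signal in SIGNALS:
        if card.get(signal) not in (0, 1, 2):
            raise ValueError(f"{signal} must be scored 0, 1, or 2")


def summarize(cards):
    """Average each signal across interviewers and flag disagreements."""
    if not cards:
        raise ValueError("need at least one scorecard")
    for card in cards:
        validate(card)
    per_signal = {
        s: sum(card[s] for card in cards) / len(cards) for s in SIGNALS
    }
    disagreements = [
        s for s in SIGNALS
        if max(card[s] for card in cards) - min(card[s] for card in cards) >= 2
    ]
    return {
        "per_signal": per_signal,
        "total": sum(per_signal.values()),  # out of 8
        "disagreements": disagreements,
    }


cards = [
    {"reasoning": 2, "debugging": 1, "testing": 2, "ownership": 0},
    {"reasoning": 2, "debugging": 1, "testing": 1, "ownership": 2},
]
result = summarize(cards)
print(result["total"])          # 5.5
print(result["disagreements"])  # ['ownership']
```

Surfacing disagreements separately from the total matters here because an averaged ownership score of 1 hides the fact that one interviewer saw a serious problem and another saw none.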

FAQs

1. What should founders look for when interviewing smart contract engineers?

Focus on their reasoning process, debugging habits, assumptions, and how they handle ambiguity.

2. Why don’t traditional interviews work for Solidity roles?

They miss invariants, security surfaces, financial risks, and state-transition reasoning.

3. How can you test a Solidity developer’s real skill?

Use simple reasoning prompts, debugging discussions, and testing scenarios that reveal their thinking.

4. Should candidates prepare differently for smart contract interviews?

Yes — they should practice reasoning, debugging workflows, and designing preventive tests.

5. How do you know if a Solidity developer can work safely in production?

Look for clear communication, accountability, and the ability to reason under uncertainty.

6. Do memorized concepts matter in smart contract interviews?

Only minimally. What matters is whether the candidate can identify risks, assumptions, and invariants.

7. Why do strong engineers stand out quickly in this interview style?

Because they naturally think in systems, simulate risk, and communicate with clarity.