A Clear Framework for Debugging Solidity Errors That Keep Reappearing in Interviews

Disclaimer: This guide covers debugging patterns commonly evaluated in Solidity interviews and early-career smart contract roles. It is not a complete security audit methodology; different teams and advanced audit firms may use deeper or more specialized techniques.

Most blockchain developers don’t fail Solidity interviews because they lack syntax knowledge.

They struggle because their debugging approach is missing, inconsistent, or overly reactive.

Interviewers often present a small, intentionally flawed contract and expect candidates to:

identify the underlying issue

ask clarifying questions

reason through the logic

simulate state transitions

test their assumptions

describe risk boundaries

Yet many candidates jump straight to “fixing the code” without understanding why the bug exists.

Debugging questions quietly influence interview outcomes because they reveal whether a developer can operate safely on testnets, in production, and under time pressure.

This post offers a repeatable debugging framework you can use in:

smart contract interviews

take-home assignments

real-world incidents

day-to-day development

internal reviews

It also helps reduce the “freeze moment” developers experience under ambiguity or pressure.

TL;DR

Debugging interviews test reasoning, not syntax.

This 6-step framework helps you demonstrate structured, security-first thinking.

Strong debugging signals trust — a major hiring advantage.

Why Interviewers Ask Debugging Questions

Debugging reveals how a developer thinks.

Founders and hiring managers use debugging exercises to evaluate:

your mental model of the EVM

your understanding of state vs. logs

how you handle ambiguity

whether you can isolate root causes

whether you test before trying to fix

your awareness of real-world risks

Debugging is not “fix that line.”

It’s essentially:

“Can you keep the system safe when something breaks?”

This insight aligns with discussions across Art of Blockchain (AOB), including:

Debugging Smart Contracts Is Tough — How Do You Make It Easier?

https://artofblockchain.club/discussion/debugging-smart-contracts-is-tough-how-do-you-make-it-easier

Candidates who show structured debugging stand out immediately.

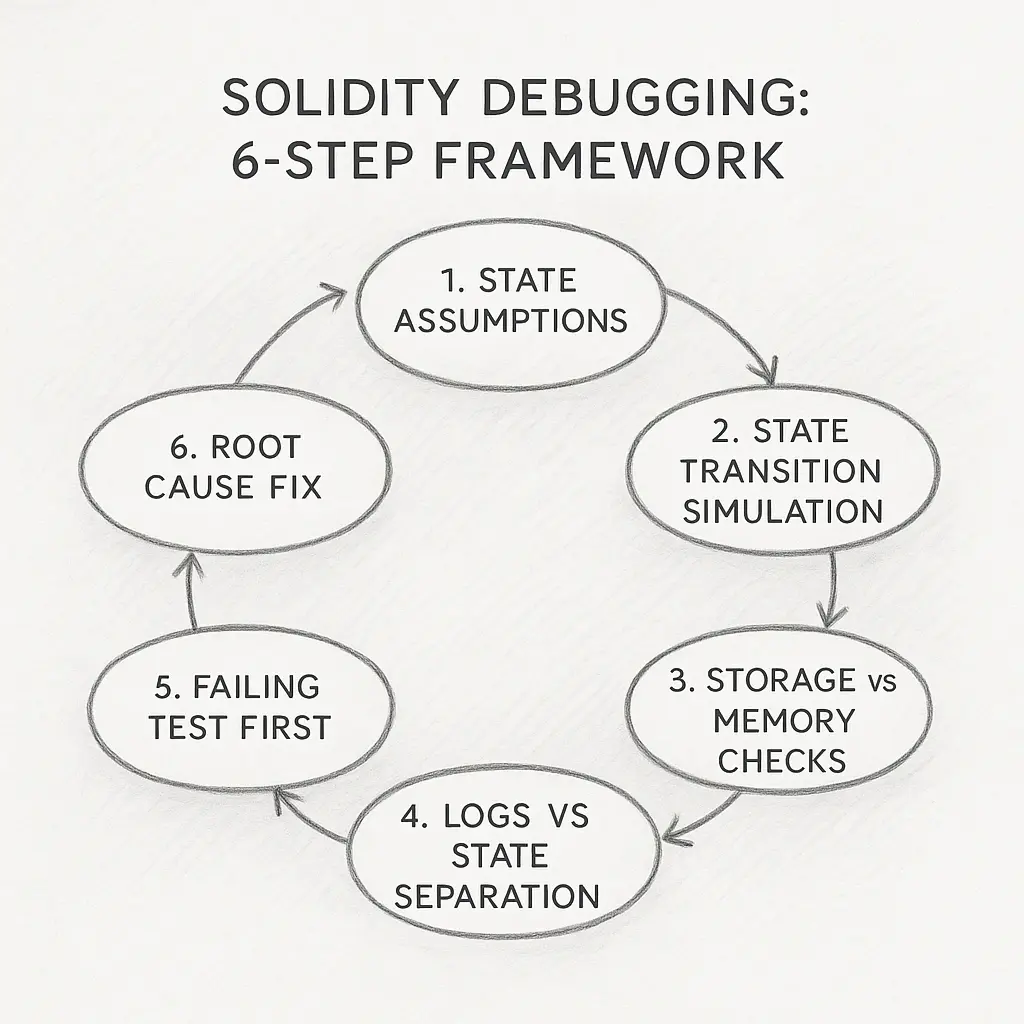

A Practical 6-Step Debugging Framework for Solidity Interviews

This framework reflects how many senior engineers articulate their reasoning during interviews.

You can apply it to most debugging scenarios you encounter.

Step 1 — Pause and State Your Assumptions Before Touching Code

Most juniors dive into “fix mode.”

Interviewers may view this as a maturity gap.

Start by saying:

“Let me restate what this function appears to be designed to do.”

“This seems to be the main invariant.”

“These are the moving parts I notice.”

“Should this call be state-changing or not?”

Assumption-aware engineers tend to prevent more issues than those who move fast without clarity.
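One way to make "the main invariant" concrete is to write it down as a view function before reading the rest of the code. The sketch below is illustrative only: the contract name, `totalOwed`, and `invariantHolds()` are hypothetical and not taken from any contract in this post.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical vault used only to show an invariant written as code.
contract InvariantVault {
    mapping(address => uint256) public balances;
    uint256 public totalOwed; // assumed bookkeeping: sum of all balances

    function deposit() external payable {
        balances[msg.sender] += msg.value;
        totalOwed += msg.value;
    }

    // Assumed invariant: the contract always holds at least what it owes.
    // `>=` rather than `==` because ether can be forced into a contract
    // (for example via selfdestruct) without going through deposit().
    function invariantHolds() external view returns (bool) {
        return address(this).balance >= totalOwed;
    }
}
```

Saying the invariant aloud and then pointing at the line that could violate it is usually enough in an interview; the function form mainly helps when you later turn the assumption into a test.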

Step 2 — Simulate the State Transition, Not the Code Flow

Many bugs become clear only when you track state before and after execution.

Example:

    function withdraw() external {
        uint amount = balances[msg.sender];
        require(amount > 0);
        (bool ok,) = msg.sender.call{value: amount}("");
        require(ok);
        balances[msg.sender] = 0;
    }

Common weak answer:

“Missing reentrancy guard.”

Stronger reasoning:

“State is updated after the external call.”

“An attacker contract could reenter withdraw() from its receive function while balances[msg.sender] still holds the old amount.”

“The invariant ‘balance must be zeroed before transfer’ breaks.”
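To trace the state transition rather than the code flow, it can help to picture the caller as a contract. The following is a minimal sketch, assuming a hypothetical `deposit()` function and wrapper contract around the `withdraw()` shown above. `VulnerableVault` and `ReentrancyProbe` are illustrative names, and the probe is meant for local testing only.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical wrapper around the flawed withdraw() from the example.
contract VulnerableVault {
    mapping(address => uint256) public balances;

    function deposit() external payable {
        balances[msg.sender] += msg.value;
    }

    function withdraw() external {
        uint256 amount = balances[msg.sender];
        require(amount > 0, "nothing to withdraw");
        // The external call runs while balances[msg.sender] still equals `amount`.
        (bool ok, ) = msg.sender.call{value: amount}("");
        require(ok, "transfer failed");
        balances[msg.sender] = 0; // state is only corrected after the call returns
    }
}

// Illustrative caller that re-enters once from its receive function.
contract ReentrancyProbe {
    VulnerableVault public immutable vault;
    uint256 public reentries;

    constructor(VulnerableVault _vault) {
        vault = _vault;
    }

    function attack() external payable {
        vault.deposit{value: msg.value}();
        vault.withdraw();
    }

    receive() external payable {
        // Re-enter a single time, and only if the vault can cover another payout.
        if (reentries < 1 && address(vault).balance >= msg.value) {
            reentries++;
            vault.withdraw();
        }
    }
}
```

Walking through it by hand: if another user has deposited 1 ether and the probe attacks with 1 ether, the inner `withdraw()` reads the same unzeroed balance, so the probe receives 2 ether while its recorded balance only ever covered 1. That before/after table is the state-transition argument interviewers are listening for.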

This aligns with reasoning patterns discussed in:

Silent Fails in Smart Contract Access Control

https://artofblockchain.club/discussion/silent-fails-in-smart-contract-access-control-what-teams-miss-until-its-too

Step 3 — Check Storage vs Memory vs Calldata Assumptions

Interviewers often hide subtle issues around:

memory copies

unintended storage mutations

array growth

expensive loops

calldata handling

Example:

    struct User { uint age; }

    mapping(address => User) users;

    function update(User memory u) external {
        users[msg.sender] = u;
    }

Useful questions to speak aloud:

“Should this input be memory or calldata?”

“Should we validate struct fields?”

“Does this maintain expected invariants?”

This signals a security-first mindset valued on L1/L2, rollup, and DeFi teams.
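The questions above can be sketched in code. The example below assumes a hypothetical `MAX_AGE` bound and two illustrative helper functions that are not part of the original snippet. It shows a `calldata` parameter with validation, plus the difference between a memory copy and a storage reference.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

contract UserRegistry {
    struct User { uint256 age; }

    mapping(address => User) public users;

    uint256 public constant MAX_AGE = 150; // hypothetical bound, for illustration

    // calldata: the argument is read in place instead of being copied to memory first.
    function update(User calldata u) external {
        require(u.age <= MAX_AGE, "age out of range"); // validate struct fields
        users[msg.sender] = u;
    }

    // Memory copy: the increment affects only the local copy and is discarded.
    function previewNextAge() external view returns (uint256) {
        User memory copy = users[msg.sender];
        copy.age += 1;
        return copy.age;
    }

    // Storage reference: the increment is written back to contract storage.
    function incrementAge() external {
        User storage ref = users[msg.sender];
        ref.age += 1;
    }
}
```

A useful habit is to name, for each local variable, which data location it lives in and whether a write to it can outlive the call.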

Step 4 — Reproduce the Bug Mentally Using Logs + State Separation

A common mistake is treating logs as state.

But:

Logs ≠ storage

Events ≠ guarantees

Simulated trace ≠ on-chain trace

Interviewers may add bugs that only appear when you separate:

“What events show” vs “What the contract has actually stored.”

Common triggers include:

unsafe delegatecall usage

incorrect proxy storage slots

incorrect assumptions about msg.value
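As a sketch of how an event can disagree with storage, consider a deliberately naive proxy whose storage layout does not match its logic contract. Both contracts below are hypothetical and written only to illustrate the first two triggers; they are not a recommended proxy design.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

contract Logic {
    address public owner; // slot 0 in Logic's layout

    event OwnerChanged(address newOwner);

    function setOwner(address newOwner) external {
        // Writes slot 0 of whichever contract's storage is active.
        owner = newOwner;
        emit OwnerChanged(newOwner);
    }
}

contract NaiveProxy {
    address public implementation; // slot 0: collides with Logic.owner
    address public owner;          // slot 1

    constructor(address _implementation) {
        implementation = _implementation;
        owner = msg.sender;
    }

    fallback() external {
        // delegatecall runs Logic's code against NaiveProxy's storage.
        (bool ok, ) = implementation.delegatecall(msg.data);
        require(ok, "delegatecall failed");
    }
}
```

If `setOwner(x)` is sent to the proxy, the log shows `OwnerChanged(x)` emitted from the proxy's address, yet the proxy's `owner` in slot 1 is unchanged and `implementation` in slot 0 now holds `x`. Reading the event alone would lead to the wrong conclusion; reading the slots shows what actually happened.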

This approach mirrors patterns discussed in:

What Should a Web3 Product Ops Team Monitor After a Mainnet Release?

https://artofblockchain.club/discussion/which-post-launch-metrics-should-web3-product-ops-monitor-after-a-mainnet-release

Step 5 — Test the Failure Before Suggesting a Fix

Strong candidates say:

“Before fixing, I want to write a failing test that reproduces the issue.”

This shows:

reproducibility

discipline

audit-style thinking

regression prevention

clarity of ownership

Common failing tests include:

underflow/overflow triggers (since Solidity 0.8, mainly inside unchecked blocks or in pre-0.8 code)

stale state reentrancy triggers

invalid input shapes
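A failing test for the first item in the list might look like the sketch below. It is framework-agnostic and uses only `try`/`catch`. `CreditLedger` and its bug are hypothetical, and the `test` prefix simply follows a naming convention used by runners such as Foundry, where you would normally use the framework's own assertion helpers instead.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

// Hypothetical contract with an underflow hidden in an unchecked block.
contract CreditLedger {
    uint256 public credits;

    function spend(uint256 n) external {
        unchecked {
            credits -= n; // bug under test: wraps around instead of reverting
        }
    }
}

contract CreditLedgerTest {
    // Fails (reverts) while the bug exists; passes once spend() rejects overspending.
    function testSpendMoreThanCreditsReverts() external {
        CreditLedger ledger = new CreditLedger();
        try ledger.spend(1) {
            // A revert inside the success block is not caught by `catch`,
            // so reaching this line makes the whole test fail.
            revert("BUG: spend(1) succeeded with zero credits");
        } catch {
            // Desired behaviour: the call reverted.
        }
    }
}
```

Writing the test first pins down exactly which behaviour counts as the bug, which makes the later fix easy to verify and hard to regress.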

For more patterns:

Smart Contract QA Testing Hub

https://artofblockchain.club/discussion/smart-contract-qa-testing-hub

Step 6 — Fix Only After Understanding the Root Cause

Interviewers pay attention to whether you fix symptoms or solve root patterns.

Weak fix:

“I moved this require.”

Stronger fix:

“The invariant breaks because the external call precedes the state update.

Possible fixes include reordering operations, adding a guard, or adjusting the withdrawal model.”

Weak fix:

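“I changed uint to uint256.” (uint is already an alias for uint256, so this changes nothing.)

Stronger fix:

“Integer truncation risk arises when a user-controlled value is cast to a narrower type than it needs. Since Solidity 0.8, arithmetic reverts on overflow, but explicit downcasts such as uint8(x) still truncate silently.

We should validate bounds before casting or keep the wider type end to end.”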

Weak fix:

“I added a modifier.”

Stronger fix:

“Access control fails because authorization is evaluated after a state change.

It should be validated before any sensitive operations.”
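For the first pair above (the withdraw ordering), the root-cause fix can be sketched as follows. This is one possible shape, assuming a hypothetical `deposit()` function and a hand-rolled `nonReentrant` modifier written for illustration. In practice many teams would reach for an audited guard such as OpenZeppelin's ReentrancyGuard instead.

```solidity
// SPDX-License-Identifier: MIT
pragma solidity ^0.8.20;

contract SaferVault {
    mapping(address => uint256) public balances;
    bool private locked; // illustrative guard flag, not an audited implementation

    modifier nonReentrant() {
        require(!locked, "reentrant call");
        locked = true;
        _;
        locked = false;
    }

    function deposit() external payable {
        balances[msg.sender] += msg.value;
    }

    function withdraw() external nonReentrant {
        uint256 amount = balances[msg.sender];
        require(amount > 0, "nothing to withdraw");       // checks
        balances[msg.sender] = 0;                         // effects
        (bool ok, ) = msg.sender.call{value: amount}(""); // interactions
        require(ok, "transfer failed");
    }
}
```

Reordering alone restores the invariant here; the guard is defence in depth. Being able to say which of the two actually addresses the root cause is the point of this step.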

This aligns with discussions like:

How Do You Rebuild Clarity in a Smart Contract Team When Owner-Driven Chaos Sets In?

https://artofblockchain.club/discussion/how-do-you-rebuild-clarity-in-a-smart-contract-team-when-owner-driven

Why This Framework Works

This sequence mirrors real workflows across:

audits

incident triage

protocol engineering

QA testing

pre-deployment reviews

mainnet monitoring

bug bounty work

Interviewers often view structured debugging as a strong proxy for real-world performance.

What Founders Learn About Your Skills When You Demonstrate Strong Solidity Debugging

Effective debugging suggests that you:

avoid unsafe code

think clearly under pressure

understand unstated assumptions

collaborate well with auditors

support incidents responsibly

reduce load on senior engineers

Strong debugging creates trust, which speeds up hiring decisions.

Common Debugging Mistakes to Avoid

These patterns often signal inexperience:

❌ Fixing issues without understanding the cause

❌ Confusing logs with actual state

❌ Skipping clarifying questions

❌ Ignoring invariants

❌ Over-focusing on syntax

❌ Freezing under ambiguity

Interviewers look for clarity — not perfection.

CTA

If you're preparing for Solidity interviews or targeting roles where debugging depth matters, explore AOB’s curated job listings — updated daily with high-signal smart contract opportunities:

👉 AOB Blockchain Job Board

https://artofblockchain.club/job/

FAQs

1. Why do Solidity interviewers focus on debugging exercises?

Because debugging reveals your reasoning style, understanding of the EVM, and ability to prevent real incidents.

2. How do I practice state-transition simulation for interviews?

Use small flawed contracts, track before/after state, and speak through the invariant.

3. What mistakes do new Solidity developers make during debugging?

Fixing too quickly, confusing logs with state, skipping clarifying questions, and ignoring invariants.

4. How do I show structured debugging in an interview?

State assumptions, simulate state transitions, check storage/memory behaviors, reproduce the issue, then propose fixes.

5. Why is writing a failing test important before fixing a bug?

It ensures reproducibility, prevents regressions, and reflects real audit workflows.

6. How can I debug reentrancy issues effectively?

Look at state-transition ordering, invariants, and where external calls occur.

7. What do founders infer when a candidate debugs well?

That the candidate can support incidents, collaborate with auditors, and avoid shipping risky code.

8. Are these debugging steps enough for real audits?

They’re sufficient for interviews and early-career roles, but advanced audits use deeper techniques as well.

Disclaimer

Always validate these debugging techniques against the documentation of the chain or protocol you're working with.

Different environments, compilers, and architectures may require additional considerations.